This article is part of a series on various approaches to Posthuman Security.

Thinking about ‘posthuman security’ is no easy task. To begin with, it requires a clear notion of what we mean by ‘posthuman’. There are various projects underway to understand what this term can or should signal, and what it ought to comprise. To bring a broadened understanding of ‘security’ into the mix complicates matters further. In this essay, I argue that a focus on the relation of the human to new technologies of war and security provides one way in which IR can fruitfully engage with contemporary ideas of posthumanism.

For Audra Mitchell and others, ‘posthuman security’ serves as a broad umbrella term, under which various non-anthropocentric approaches to thinking about security can be gathered. Rather than viewing security as a purely human good or enterprise, ‘posthuman’ thinking instead stresses the cornucopia of non-human and technological entities that shape our political ecology, and, in turn, condition our notions of security and ethics. For Mitchell, this process comprises machines, ecosystems, networks, non-human animals, and ‘complex assemblages thereof’. Sounds clear enough, but this is where things begin to get complicated.

First, what exactly is ‘post’ about the posthuman? Often lumped in together under the category of ‘posthumanism’ are ideas of transhumanism, anti-humanism, post-anthropocentrism, and speculative posthumanism.[1] Each variant has different implications for how we think ‘security’ and ‘ethics’ after, or indeed beyond, the human. Furthermore, one must ask whether it is even possible to use concepts of security, ethics, and politics after or beyond the human. These concepts are not only social constructs; they are also fundamentally human constructs. To think ‘after-the-human’ may well then render these concepts entirely obsolete. And as if this were not enough to wrap one’s head around, we may further need to clarify whether it is post-humanity or post-humanness we aim to understand when we strive to think beyond the human. It appears, then, that the posthuman turn in security studies risks raising more questions than it helps to answer. A clarification of how these terms are used in the literature is thus necessary.

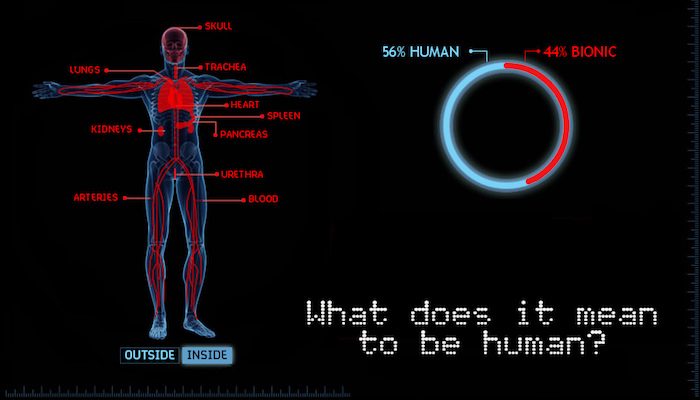

To date, the most clearly defined strands of posthumanist discourse are ‘critical posthumanism’ and ‘transhumanism’, as elaborated in the work of Donna Haraway, Neil Badmington, Ray Kurzweil, and Nick Bostrom, among others. Both discourses, although very different in their approach and focus, posit a distinctly modern transformation through which human life has become more deeply enmeshed in science and technology than ever before. In this biologically informed techno-scientific context, human and machine have become isomorphic. The two are fused in both functional and philosophical terms, with technologies shaping human subjectivity as much as human subjectivities shape technology. The question of technology has thus, as Arthur Kroker puts it, become a question of the human. The question of the human, however, looks decidedly different when viewed through modern techno-scientific logics of functionality and performance. Indeed, as contemporary life becomes ever more technologised, the human appears more and more as a weak link in the human-machine chain – inadequate at best, obsolete at worst.

The interplay between man and machine has, of course, a long-standing history that can easily be conceived of in posthuman terms. In the present context, doing so would highlight our submission to technological authority in apparently human endeavours such as war and security. This is particularly important given the rapid proliferation of new military technologies, which strive for ever-greater levels of autonomy and artificial intelligence. Such technologies are extending, enhancing, and refiguring new machine-humans. It is therefore crucial, at least from a critical perspective, to get a handle on the kind of machine-human subjectivities our new ways of war and security are producing.

In a fervent drive for progress, scientists and roboticists work feverishly to replace what we hitherto have known and understood as human life with bigger, better, bolder robot versions of what life ought to be – fully acknowledging, if not embracing, the possibility of rendering humans increasingly obsolete. Machines are designed to outpace human capabilities, while old-fashioned human organisms cannot progress at an equal rate and will, eventually, “clearly face extinction”.[2] Recognising this, technology tycoon Elon Musk has recently issued a dire warning about the dangers of rapidly advancing Artificial Intelligence (AI) and the prospects of killer robots capable of “deleting humans like spam”. Musk is not alone in his cautious assessment. Nick Bostrom, in a recent UN briefing, echoes such sentiments when he warns that AI may well pose the greatest existential risk to humanity today, if current developments are any indication of what is likely to come in the future.[3] Other science and technology icons, like Stephen Hawking and Bill Gates, have joined the chorus too, seeing new combinations of AI and advanced robotics as a grave source of insecurity going forward.

Statements like these betray not only a certain fatalism on the part of humans who have, in fact, invented, designed, and realised said autonomous machines; they also pose the question of whether the advancement of technology can indeed still be considered a human activity, or whether technology itself has moved into a sphere beyond human control and comprehension. Humans, as conceived by transhumanist discourses, are involved in a conscious process of perpetually overcoming themselves through technology. For transhumanists, the human is “a work-in-progress”, perpetually striving toward perfection in a process of techno-scientifically facilitated evolution that promises to leave behind the “half-baked beginning[s]” of contemporary humanity.[4] Transhumanism, however, is – as David Roden points out – underwritten by a drive to improve and better human life. It is, he notes, a fundamentally normative position, whereby the freedom to self-design through technology is affirmed as an extension of human freedom.[5] Transhumanism “is thus an ethical claim to the effect that technological enhancement of human capacities is a desirable aim”.[6] However, the pursuit of transhumanism through AI, NBIC[7] sciences, and computer technologies does not guarantee a privileged place for humans in the historical future. Rather, the on-going metamorphosis of human and machine threatens “an explosion of artificial intelligence that would leave humans cognitively redundant”.[8] In such a scenario, the normative position of transhumanism necessarily collapses into a speculative view on the posthuman, wherein both the shape of the historical future and the place of the human within this become an open question. Indeed, in the future world there may be no place for the human at all.

This perhaps unintended move toward a speculative technological future harbours a paradox. First, the conception of science and technology as improving or outmoding the human is an inherently human construct and project – it is neither determined nor initiated by a non-human entity which demands or elicits submission based on its philosophical autonomy; rather, it is through human thought and imagination that this context emerges in the first place. The human is thus always-already somehow immanent in the technological post-human. Yet at the same time, it is the overcoming, at the risk of outmoding, of human cognition and functionality that forms the basic wager of speculative posthumanism.[9] Thus, while the posthuman future will be a product of human enterprise, it will also be a future in which the un-augmented human appears more and more as flawed, error-prone, and fallible. Contemporary techno-enthusiasm therefore carries within it the seeds of our anxiety, shame, and potential obsolescence as ‘mere humans’.

This new hierarchical positioning of the human vis-à-vis technology represents a shift in both. Put simply, the ‘creator’ of machines accepts a position of inferiority in relation to his or her creations (be these robots, cyborgs, bionic limbs, health apps, or GPS systems, to give just a few examples). This surrender relies on an assumed techno-authority of produced ‘life’ on the one hand, and an acceptance of inferiority – as an excess of the human’s desire to ‘surpass man’, to become machine – on the other. The inherently fallible and flawed human can never fully meet the standards of functionality and perfection that are the mandate for the machines they create. And it is precisely within this hybridity of being deity (producer) and mortal (un-produced human) that an unresolved tension resides. Heidegger’s student and Hannah Arendt’s first husband, Günther Anders, has given much thought to this. His work extensively grapples with the condition that characterises the switch from creator to creatum, and he diagnoses this distinctly modern condition as one of ‘Promethean Shame’. It is the very technologisation of our being that gives rise to this shame, which implies a shamefulness about not-being-machine, encapsulating both awe at the superior qualities of machine existence, and admiration for the flawless perfection with which machines promise to perform specific roles or tasks. To overcome this shame, Anders argues, humans began to enhance their biological capacities, striving to make themselves more and more like machines.

The concept of shame is significant not simply as ‘overt shame’ – which is akin to a “feeling experienced by a child when it is in some way humiliated by another person”[10] – but also as an instantiation of being exposed as insufficient, flawed, or erroneous. This latter form of shame is concerned with “the body in relation to the mechanisms of self-identity”,[11] and is intrinsically bound up with the modern human-technology complex. To compensate for, adapt to, and fit into a technologised environment, humans seek to become machines through technological enhancement, not merely to better themselves, but also to meet the quasi-moral mandate of becoming a rational and progressive product: ever-better, ever-faster, ever-smarter, superseding the limited corporeality of the human, and eventually the human self. This mandate clearly adheres to a capitalist logic, shaping subjectivities in line with a drive toward expansion and productivity. It is, however, a fundamentally technological drive insofar as functionality per se, rather than expansion or productivity, is the measure of all. Nowhere is this more starkly exemplified than in current relations between human soldiers and unmanned military technology.

Consider, for example, military roboticist Ronald Arkin’s conviction that the human is the weakest link in the kill chain. Such a logos – which is derived from the efficient and functional character of technology as such – suggests that the messy problems of war and conflict can be worked away through the abstract reasoning of machines. Arkin, one of the most vocal advocates of producing ‘ethical’ lethal robots by introducing an ‘ethical governor’ into the technology, inadvertently encapsulates both aspects of techno-authority perfectly when he asks: “Is it not our responsibility as scientists to look for effective ways to reduce man’s inhumanity to man through technology?” For Arkin, the lethal robot is able to make a more ethical decision than the human, simply by being programmed to use a pathway for decision-making based on abstracted laws of war and armed conflict. The human, in her flawed physiological and mental capacity, is thus to be governed by the (at least potential) perfection of a machine authority. This is by no means a mere brainchild of outsider techno-enthusiasm – quite the contrary: the US Department of Defense (DoD) is an active solicitor of increasingly intelligent machines that, one day soon, will be able to “select and engage targets without further intervention by a human operator”, and will possess the reasoning capacity needed to “assess situations and make recommendations or decisions”, including, most likely, kill decisions.

Leaving the heated debate about Lethal Autonomous Weapons Systems (LAWS) – or Killer Robots – aside for a moment, this logic is testament to the Promethean Shame detected by Anders half a century prior. In his writings, Anders astutely realised the ethical implications of such a shift in hierarchical standing. As Christopher Müller notes in his discussion of Anders’ work, the contemporary world is one in which machines are “taking care” of both functional problems and fundamentally existential questions; “[i]t is hence the motive connotations of taking care to relieve of worry, responsibility and moral effort that are of significance here”. It is in such a shift toward a techno-authority that ethical responsibility is removed from the human realm and conceived of instead in techno-scientific terms. Ethics as a technical matter “mimes scientific analysis; both are based on sound facts and hypothesis testing; both are technical practices”.[12] I address this problem of a scientifically informed rationale of ethics as a matter of technology elsewhere. What I would like to stress here, though, are the possible futures associated with this trajectory.

The speculative nature of posthumanism requires that we have some sense of imagination as to how our humanity might comprehensively be affected by technology. A challenge in modern thinking about technology was the apparent gap between the technology we produce and our imagination regarding the uses to which this technology is put. For Anders, there is a gap between product and mind, between the production (Herstellung) of technology and our imagination (Vorstellung) regarding the consequences of its use.[13] Letting this gap go unaddressed produces space for a technological authority to emerge, wherein ethical questions are cast in increasingly technical terms. This has potentially devastating implications for ethics as such. As Anders notes, the discrepancy between Herstellung and Vorstellung signifies that we no longer know what we do. This, in turn, takes us to the very limits of our responsibility, for “to assume responsibility is nothing other than to admit to one’s deed, the effects of which one had conceived (vorstellen) in advance”. And what becomes of ethics, when we can no longer claim any responsibility?

Notes

[1] See David Roden, Posthuman Life: Philosophy at the Edge of the Human (Abingdon: Routledge, 2015). Roden engages with the various discourses on ‘posthumanism’ today, going to great lengths to highlight the differences between various kinds of ideas that are attached to the term. While these are relevant in the wider context of this article, I lack the space to engage with them in full here.

[2] Peter Singer, Wired for War: The Robotics Revolution and Conflict in the 21st Century (London: Penguin, 2009), p. 415.

[3] Nick Bostrom, ‘Briefing on Existential Risk for the UN Interregional Crime and Justice Research Institute’, 7 October 2015.

[4] Nick Bostrom, ‘Transhumanist Values’, Journal of Philosophical Research 30: Supplement (2005), pp. 3-14, at 4.

[5] Roden, Posthuman Life, pp. 13-14.

[6] Ibid., p. 9.

[7] The ‘NBIC’ suite of technologies comprises nanotechnology, biotechnology, information technology and cognitive sciences.

[8] Roden, Posthuman Life, p. 21.

[9] At this point we can clarify the differences between humanism, transhumanism, posthumanism, and the posthuman. By ‘humanism’ I mean those discourses and projects that take some fixed idea of the human as their natural centre, and by ‘transhumanism’ I mean those that grapple with or aim at an active technical alteration of the human as such. I reserve the term ‘posthumanism’ for those discourses that seek to think a future in which the technical alteration of the human has given rise to new forms of life that can no longer properly be called human. The ‘posthuman’ is a name for these new, unknown forms of life.

[10] Anthony Giddens, Modernity and Self-Identity: Self and Society in the Late Modern Age (Cambridge: Polity, 2003), p. 65.

[11] Ibid., p. 67.

[12] Donna Haraway, Modest_Witness@Second_Millennium. FemaleMan©_Meets_OncoMouseTM (London: Routledge, 1997), p. 109.

[13] Günther Anders, Endzeit und Zeitende (München: C.H. Beck, 1993).
