This article is part of a series.

The legal, security and diplomatic communities are being challenged to assess how the advent of lethal autonomous weapon systems (LAWS) will affect the conduct of future warfare. Depending on how these systems are developed and fielded, the novel military capabilities that LAWS could give states will test the foundations of international humanitarian law, military doctrine and applied ethics. The Canberra Working Group seeks to provide guiding principles within those categories for the development and use of LAWS in conflict, to ensure that emerging systems do not undermine those foundations. One compelling reason states seek to add autonomous capability to the functions of weapon systems is that it offers the potential for a machine to respond to complex inputs in a desired way when communication and direct control are infeasible. That type of capability offers states obvious military utility: it would allow them to operate faster, in more environments, and with different risk parameters for their own forces. This paper sets the accompanying ‘Guiding Principles for the Development and Use of Lethal Autonomous Weapon Systems’ in context by highlighting the following: outcomes of previous international discussions concerning new military technologies; key aspects of ongoing discussions between governments; and a potential way forward in the event of political stalemate at the United Nations (UN) Group of Governmental Experts (GGE) on LAWS.

Background

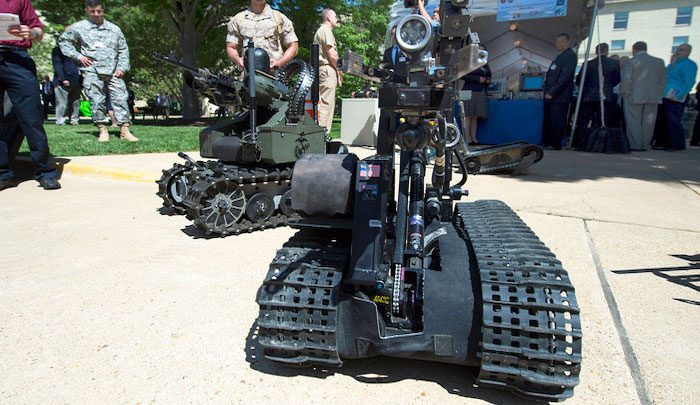

The potential for developing autonomous weapon systems [1] has increased in recent decades as a result of advances in computing, artificial intelligence (AI), remote sensing and a host of other technologies. In 2014 the International Committee of the Red Cross (ICRC) convened governmental representatives and specialists from a range of fields in Geneva for an ‘Expert Meeting on Autonomous Weapon Systems: Technical, Military, Legal and Humanitarian Aspects’. Against this backdrop, the UN has been formally discussing the development and use of LAWS since the first meeting of the GGE of the High Contracting Parties to the Convention on Certain Conventional Weapons (CCW) in November 2017. The political landscape of the GGE on LAWS is complex and contested. Alongside the discussions between states, a range of organisations are active, with aims ranging from education to advocacy. The Campaign to Stop Killer Robots is perhaps the best known of the advocacy organisations.

There are, broadly speaking, three potential outcomes of international negotiations, such as those at the CCW, concerning the advent of new weapons. First, states could agree an outright preemptive ban. This was attempted in response to early advances in military aircraft capabilities at The Hague in 1922-23 and at the Geneva Disarmament Conference of 1932-34, where states met to discuss outlawing bombing from aircraft. In those talks, however, the strategic interests of the major industrialised countries prevailed and no agreement was reached. Air power historian Peter Gray described the outcome of the former as ‘a political and legal failure’, although the advent of bombing from the air did enable the UK, for example, to police its then Empire more cost-effectively. These and more recent international discussions reflect the fact that no major military technology has ever been uninvented: not nuclear, biological or chemical weapons, nor area denial bombs or massive vacuum bombs.

A second possibility is a legally binding international treaty that sets out agreed parameters within which autonomous weapons can be developed and used. The Hague (1899 and 1907) and Geneva (1949) Conventions framed certain restrictions on the methods and means of war, and subsequent treaties have laid out specific prohibitions. For example, there are treaties addressing weapons designed to cause unnecessary harm, such as blinding lasers (the only example to date of a preemptive ban), and indiscriminate weapons, such as the Ottawa Convention, in force since 1999, which bans anti-personnel landmines. The existence of the Ottawa Convention does not, however, amount to a complete landmine ban, for two key reasons. First, not every state is party to it: the major military powers of the US, China and Russia have not joined the Ottawa Convention and are not bound by it. That said, the situation is more nuanced: in 2014 the US announced that it would observe the terms of the anti-personnel landmine ban except on the Korean Peninsula. Neither China nor Russia has taken similar steps. Second, non-state groups cannot be party to international treaties.

The third possibility is that competing national interests around LAWS will produce political stalemate at the GGE, leading either to a non-binding political statement or to permanent stasis. The CCW is a consensus-based body, and thus far the states parties have agreed only to extend talks on LAWS over successive years.

Ongoing Discussions

The three potential outcomes of international discussions outlined above correspond to positions that are emerging in the ongoing meetings of the GGE on LAWS. Representatives of some states advocate an outright ban on the development and use of LAWS. This group is made up largely of smaller states and states from the Global South, and its position is supported by a number of civil society organisations engaged in the discussion. In contrast, none of the major powers – the US, China or Russia – has indicated that it would support such an outright ban, and precedent with other weapon systems suggests that this is likely to remain the case. The major powers appear reluctant to impose any limits on these systems beyond what international law already prescribes.

A third grouping is in favour of finding a middle way. Its proposed responses fall short of new international law but go beyond simply accepting the status quo of potentially unconstrained development of autonomous weapons. One example is a joint proposal put forward by France and Germany in 2018, which advocated that states join together in issuing a political declaration. The declaration would articulate a range of agreed principles but would not be binding in international law. Separately, some technologically advanced states have set out official national positions on LAWS. For example, the British position was stated in a Parliamentary debate on Lethal Autonomous Robotics: ‘[T]he Government of the United Kingdom do not possess fully autonomous weapon systems and have no intention of developing them…As a matter of policy, Her Majesty’s Government are clear that the operation of our weapons will always be under human control as an absolute guarantee of human oversight and authority and of accountability for weapons usage.’

A Way Ahead

If discussions at the UN GGE on LAWS head towards stalemate, with neither a ban nor a legal treaty emerging, one possible way forward would echo previous international responses to the growing use of private military contractors in armed conflict. In 2005 the UN established a Working Group to investigate the use of mercenaries ‘as a means of violating human rights and impeding the exercise of the rights of peoples to self-determination’. A number of international diplomatic efforts followed, but no ban on private military contractors was achieved. More successful was a joint endeavour by the Swiss Government and the ICRC. They brought together a group of states and international experts to address the topic, while explicitly excluding one key issue from the deliberations: the legitimacy and advisability of using private military contractors in armed conflicts. The group began by restating the relevant international law, then outlined good practices for states choosing to employ private military companies during armed conflict. The resulting guide, known as the Montreux Document, does not have the status of a legal treaty but has become very influential.

Given the potential for impasse over future legal limits on autonomous weapons, the Montreux approach offers a template for an alternative way ahead. In April 2019 an independent group of experts – ethicists, international lawyers, technologists and military practitioners – met in Canberra and set aside the question of the legitimacy and desirability of LAWS in order to discuss practical options. By August 2019 they had produced an initial list of Guiding Principles for the Development and Use of LAWS, as a first step towards articulating what good international practice might look like. The Guiding Principles are published in full alongside this paper and address:

- Principle 1. International Humanitarian Law (IHL) Applies to LAWS

- Principle 2. Humans are Responsible for the Employment of LAWS

- Principle 3. The Principle of Reasonable Foreseeability Applies to LAWS

- Principle 4. Use of LAWS Should Enhance Control over Desired Outcomes

- Principle 5. Command and Control Accountability Applies to LAWS

- Principle 6. Appropriate Use of LAWS is Context Dependent

AI and other technological advances will enable weapons to be developed with increasingly sophisticated levels of autonomy. The world therefore faces three choices: a legally enforced ban, unlimited development and use, or some more limited code which may, over time, contribute to the development of international norms around LAWS. The Guiding Principles are a starting point for discussing how autonomous weapons might be constrained, at least in part, and what ‘good’ practice might look like. It is an imperfect approach in an imperfect world, but it might be the only practical way ahead.

Further Reading on E-International Relations

- A Critique of the Canberra Guiding Principles on Lethal Autonomous Weapon Systems

- Historical and Contemporary Reflections on Lethal Autonomous Weapons Systems

- Guiding Principles for the Development and Use of LAWS: Version 1.0

- ‘Effective, Deployable, Accountable: Pick Two’: Regulating Lethal Autonomous Weapon Systems

- Artificial Intelligence, Weapons Systems and Human Control

- How Autonomous are the Crown Dependencies?